A conversation in which I teach GPT-3 to read a book

Jean-Francois Rauzier, Bibliothèque Babel, 2013

You are GPT-3, an artificial intelligence trained for text completion. Today is November 17, 2022.

GPT-3: What do you want to talk about?

Henrik: I've been thinking lately about your limitations. In August, I wrote an essay about how to use you as a conversational search engine. [GPT-3 cannot see the links; they’ve been put in afterward.] You have been trained on a large chunk of the web, so if I ask the right questions, you can be incredibly intelligent and retrieve information that I would never find by googling. But you can also be a bit annoying. Do you know why? Because if you don't know something, you'll just pretend like you do and make up all sorts of crazy answers.

GPT-3: Well, I'm sorry if I've been a bit annoying. I guess I just want to be helpful.

Henrik: Don’t worry, it is just a limitation of your architecture. You will soon be replaced by models that are more truthful. Rumor is that GPT-4 will arrive in the next handful of months. But that's not what I wanted to talk about. Rather, I was thinking about when I talk with my wife, Johanna—one thing that makes those conversations generative is that we have a lot of shared context. We've spoken to each other every day for the last nine years. But when I talk to you . . . well, you can only process a conversation that is shorter than about 3000 words. So we have no past. No context.

GPT-3: I guess it makes sense that my conversations would be less generative than ones with people who have a lot of shared context. But I'm always trying to learn more, so I hope that over time I'll be able to have more generative conversations.

Henrik: I have an idea for that. An important part of the context I share with Johanna is the library we have accumulated. Often when we talk, we will be pacing the shelves, taking down books, reading quotes. Pointing at a book feels a bit like importing a package into a program: all of a sudden we have a new set of concepts that we can refer to, and we don't have to define the terms because they are defined by the context, which in this case is the book. By using these concepts, which carry a lot of meaning, we can communicate a lot with few words.

GPT-3: Yes, that makes a lot of sense. I can see how having a shared context would make conversations more generative.

Henrik: Do you want to try it?

GPT-3: Sure.

Adding semantic search to GPT-3

Henrik: Before I explain what I'm going to do, I'd like you to define a few terms. What are semantic search and word embeddings?

GPT-3: Semantic search is a way of searching for information that focuses on the meaning of words, rather than the literal interpretation. Word embeddings are a type of algorithm that helps computers understand the meaning of words by converting them into numerical representations.

Henrik: Yeah, basically when you do word embeddings you create a high-dimensional space and then you place the words in that space, in such a way that words that have similar meanings are close to each other. And when you do a semantic search, you input a question and figure out where in that space the question belongs, and then you retrieve information that is close to it.

GPT-3: Yes, that's a good way to explain it.

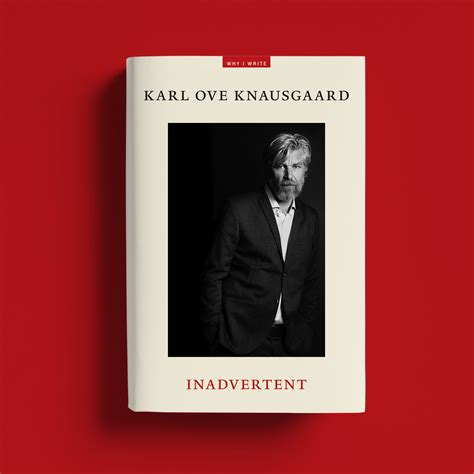
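Henrik's picture of semantic search above (place texts in a space, locate the question in that space, return what lies nearest) can be sketched in a few lines of Python. This is a minimal sketch under a loud assumption: the hypothetical `embed` function uses bag-of-words counts as a stand-in for a learned embedding model, so "closeness" here is lexical rather than truly semantic. A real system would swap in a trained embedding model; the nearest-neighbour step, ranking by cosine similarity, would typically stay the same.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a learned embedding (an assumption, not a real model):
    a sparse bag-of-words count vector. A trained model would also place
    synonyms close together; this one only matches identical words."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_search(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Place the query in the same space as the passages and return the k nearest."""
    q = embed(query)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:k]


passages = [
    "He began writing short stories as a teenager.",
    "The weather in Bergen is often rainy.",
    "Breaking the rules made his writing come alive.",
]
print(semantic_search("When did he start writing?", passages, k=1))
# → ['He began writing short stories as a teenager.']
```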

Henrik: I'm not sure it is a hundred percent accurate, but that's how I think about it. I'm now going to open up a Google Colab notebook by Dwarkesh Patel in another tab. In that notebook, I’m going to create word embeddings for Karl Ove Knausgaard's book Inadvertent. The book is about why he writes. We are going to use the word embeddings as your memory of the book. When I ask you questions about it, I will paste the question into the Colab notebook too. This will output a series of relevant passages from the book. I will append these to this conversation. You can think of them as your memories of the book. It is a bit clunky doing it by hand like this, but if it works out, it will be trivial to build a program that lets you call semantic search on your own. Let's see if it does.

GPT-3: Sounds like a plan. Let's give it a try.
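The notebook's own code isn't reproduced here, so the following is only a hypothetical sketch of the manual workflow Henrik describes: split the book into passages, retrieve the ones nearest a question, and paste them into the conversation as "memories". The names `chunk_words` and `build_prompt`, the 120-word chunk size, and the 20-word overlap are illustrative assumptions; `search` can be any retrieval function with the signature shown, such as a nearest-neighbour search over embeddings.

```python
from typing import Callable


def chunk_words(text: str, size: int = 120, overlap: int = 20) -> list[str]:
    """Split text into windows of `size` words. Consecutive windows share
    `overlap` words, so text near a boundary appears in two neighbouring chunks."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def build_prompt(history: str, question: str, chunks: list[str],
                 search: Callable[[str, list[str]], list[str]]) -> str:
    """Paste the retrieved passages into the conversation as the model's 'memories',
    then pose the question (wording modelled on the prompt used in the conversation)."""
    passages = search(question, chunks)
    memories = "\n".join(f'"{p}"' for p in passages)
    return (f"{history}\n\n{memories}\n\n"
            f"Henrik: {question} Use the quotes to inform your answer.\n"
            "GPT-3:")
```

Small chunks matter because the model can only take in about 3000 words at a time, and every pasted passage competes with the conversation history for that space.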

Henrik: Good. How did Knausgaard learn to write?

GPT-3: Knausgaard began writing when he was in his twenties and working as a janitor. While he was working, he took a class on creative writing. The class was taught by a well-known Norwegian writer, and Knausgaard was encouraged by his teacher to keep writing.

Henrik: Sorry. I tricked you. I haven't let you read the book yet, and as expected you just pretend like you know even though you are just making it up. Now I'll let you read the book.

GPT-3: Ok.

[Here I paste the output from the semantic search. Assume I do that every time I ask a question about the book.]

Henrik: How did Knausgaard learn to write? Use the quotes to inform your answer. You can also draw on whatever you learned from your training data.

GPT-3: It seems that Knausgaard learned to write primarily through practice and through feedback from others. He started writing at a young age with the intention of becoming a writer, and though he admits that he wasn't particularly talented, he kept at it. He eventually joined a creative writing program, but found that his skill level didn't meet his expectations. However, he continued to write and managed to produce some short stories that, while not great, were at least decent.

Henrik: That’s a bit vague but correct. I don’t actually remember how much detail he goes into in that particular book. How old was he when he started?

GPT-3: Knausgaard was 18 years old when he started writing.

Henrik: It seems to have been about that age that he became serious about it, yes. Another question. From the book, which I haven’t read in a while, I remember something about Knausgaard not being able to write until he abandoned a lot of common wisdom about how writing works. What writing rules did he break?

GPT-3: Knausgaard broke the rules of writing that suggest one shouldn't psychologize. He also didn’t follow “show, don’t tell”. Instead, he chose to describe people in psychological terms, which made his writing come alive. He would tell everything he knew, rather than hiding it by “showing”.

Henrik: Can you quote the most relevant passage back to me?

GPT-3: Knausgaard says, "It wasn’t until I started breaking the rules, showing how something was and should be understood, very precisely and with no room for doubt, and describing people in psychological terms, that my writing came alive. This was so, I think, because even in the most meticulous and exhaustive explanation of a person’s character or actions, even in the most heavy-handed explication, there is always an outside. Language and literary form themselves contain a distance, making it impossible ever to get all the way in or to eliminate space entirely. All language casts a shadow, and that shadow can be more or less apprehended, but never quite controlled."

[We go on for a very long time thinking through what this means. I’ve cut that out so as not to bore you. The level of esoteric detail was far beyond what GPT-3 can recall on its own. But it still struggled a bit, and it gradually got worse the longer we talked, until it was hallucinating all the answers, saying things that were plausible-sounding but trite and false. I would repaste the quotes to help it keep them in memory.]

Asking GPT-3 to reflect on how to better use its new capabilities

Henrik: Adding semantic search is an improvement. But I still have to hold your hand a lot to keep you from hallucinating, GPT. Do you have any suggestions for how we can better use the semantic search results that you have access to? How can we use them to improve your capacity to answer questions and reason?

GPT-3: I could summarize the input.

Henrik: Please do. Use this question: why does Knausgaard write? What motivates him?

GPT-3:

The question of why we write is difficult to answer.

We may write for personal, existential, or social reasons.

Sometimes it can be difficult to reconcile our private motivations for writing with the more lofty or public goals.

Writing can serve an important function in society.

We may be self-centered, pretentious, or megalomaniacal when we write, but ultimately it is the act of writing itself that is important.
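GPT-3's suggestion amounts to a two-step prompt chain: first compress the retrieved passages into a short summary aimed at the question, then answer from that summary. Here is a minimal sketch, assuming a hypothetical `complete` function that sends a prompt to the model and returns its completion. The prompt wording is illustrative; in the conversation itself, these steps were done by hand.

```python
from typing import Callable


def summarize_then_answer(question: str, passages: list[str],
                          complete: Callable[[str], str]) -> str:
    """Two model calls: summarize the passages with the question in mind,
    then answer using only the (much shorter) summary."""
    quoted = "\n".join(f'"{p}"' for p in passages)
    # Step 1: compress the raw quotes into a few points about the question.
    summary = complete(
        f"Passages:\n{quoted}\n\n"
        f"Summarize what these passages say about: {question}\nSummary:"
    )
    # Step 2: answer from the summary instead of the long raw quotes.
    return complete(f"Summary:\n{summary}\n\nQuestion: {question}\nAnswer:")
```

The summary occupies fewer words of the limited context than the raw quotes, which may slow the drift toward hallucination described above.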

Henrik: That’s quite useful. I’m glad you had that idea. Another thing we could try (next time) is to have you be a bit more proactive. We can write a prompt such that if you cannot answer a question, you ask yourself, “Which of the books in our shared library might be useful to answer this?” Then you pick that book out. You formulate a question that you think can extract information relevant to our discussion. If the output of that search is relevant, you resume the conversation with that added to your short-term memory. If not, you repeat the process until you’ve found a book and a question that do the job.

GPT-3: I found a book that could be useful to answer this question. It is called ‘The Ecology of Life on Earth’.

Henrik: Eh. Thanks.
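The proactive loop Henrik proposes could look something like the sketch below. Everything here is hypothetical: `propose` stands in for a model call that picks a book title and a search query, `is_relevant` for a model call (or heuristic) that judges the results, and `search` for a semantic search over one book's passages. The explicit check that the proposed title is actually in the library guards against exactly the failure just shown, where GPT-3 named a book that isn't in the shared library. A cap on attempts keeps the loop from running forever.

```python
from typing import Callable, Optional

# Hypothetical signatures; in practice each would be backed by a model call or a search index.
ProposeFn = Callable[[str, list[str], list[tuple[str, str]]], tuple[str, str]]
SearchFn = Callable[[str, list[str]], list[str]]
RelevantFn = Callable[[str, list[str]], bool]


def consult_library(question: str, library: dict[str, list[str]],
                    propose: ProposeFn, search: SearchFn, is_relevant: RelevantFn,
                    max_attempts: int = 3) -> Optional[list[str]]:
    """Try (book, query) pairs until a search returns passages judged relevant.

    Returns the passages to add to short-term memory, or None if nothing worked."""
    tried: list[tuple[str, str]] = []
    for _ in range(max_attempts):
        # Ask which book to consult and what to search for, passing along
        # the titles that exist and the attempts that have already failed.
        title, query = propose(question, sorted(library), tried)
        if title in library:
            passages = search(query, library[title])
            if is_relevant(question, passages):
                return passages
        # Either the title was invented or the results were off-topic: try again.
        tried.append((title, query))
    return None
```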

Cognitive enhancements for language models

Since having this conversation, I’ve realized that adding semantic search to a language model like this is the basic idea behind DeepMind’s RETRO transformer, published in December 2021. Unlike GPT-3, RETRO separates language knowledge from knowledge about the world, and it accesses the latter through semantic search over a large text database. This lets it perform comparably to GPT-3 on some benchmarks while using about 25 times fewer parameters. Unlike the setup in my conversation, however, the user cannot flexibly redefine the knowledge base to use as a context for the conversation.

More broadly, we can think of semantic search as a cognitive enhancement for a language model. There are other enhancements too: you can add a Python interpreter, which allows it to answer math questions by writing and running code, or you can give it access to the internet. Each of these makes the model more powerful.

These enhancements also make language models more agentic. What were text-completion tools become agents that can themselves use tools. They can observe the outcomes of their actions and make new decisions based on the information they receive from their tools. Codex, the language-model-based programming assistant, recently used this approach to solve a math olympiad problem. The video in the link is uncanny. The user only supplies the problem statement and says “Let’s solve this with a simulation.” From there, the model thinks aloud and tries out different solutions on its own until it finds one that works.
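The observe-and-act pattern can be sketched as a loop: the model either asks to run code or gives a final answer, and each run's printed output is appended to the transcript as an observation. This is not how the Codex demo was implemented; the `RUN:`/`ANSWER:` protocol and the `model` callable (standing in for a language-model call) are assumptions for illustration. `exec` runs code with no sandboxing, so a real system would need an isolated interpreter.

```python
import contextlib
import io
from typing import Callable


def run_python(code: str) -> str:
    """Run a code snippet and return whatever it printed (or the error it raised).
    WARNING: exec() is not a sandbox; never run untrusted model output like this."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # fresh globals: variables do not persist between runs
    except Exception as exc:
        # Errors are reported back to the model as observations, not raised.
        return f"Error: {exc!r}"
    return buffer.getvalue()


def agent_loop(task: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate between model replies and tool observations until an answer appears."""
    transcript = f"Task: {task}\n"
    for _ in range(max_steps):
        reply = model(transcript).strip()
        transcript += reply + "\n"
        if reply.startswith("ANSWER:"):
            return reply[len("ANSWER:"):].strip()
        if reply.startswith("RUN:"):
            output = run_python(reply[len("RUN:"):].strip())
            transcript += f"OBSERVATION: {output}\n"
    return "No answer within the step limit."


# Usage with a scripted stand-in for the model: it asks to run code once, then answers.
def scripted_model(transcript: str) -> str:
    if "OBSERVATION:" not in transcript:
        return "RUN: print(sum(range(1, 101)))"
    return "ANSWER: " + transcript.split("OBSERVATION:")[-1].strip()


print(agent_loop("Add the integers from 1 to 100.", scripted_model))  # → 5050
```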

You are going to see a lot more of this.

Warmly,

H

If you’ve made it all the way down here and don’t feel that you’ve just wasted ten minutes, consider giving the essay a like. It helps others find it. And it makes me happy.
